Beyond the Buzzwords: What AI Agents Really Mean (and Why PMs Need to Ditch the Hype and Move to Reality)

A No-BS Guide for Product Managers on AI Agents vs AI Assistants

Introduction: The "Agent" Overload - Let's Get Real

Let's talk about AI Agents.

Yes, you've heard the term. It's everywhere. Plastered across startup pitch decks, dominating tech news headlines, and debated by LinkedIn thought leaders.

It promises this incredible future where autonomous AI systems handle complex tasks, freeing us humans up for higher-level thinking.

Sounds fantastic, right? Almost magical. But... it also sounds incredibly fuzzy.

What exactly makes an AI system an "Agent"? How is it different from the AI Assistants like ChatGPT or Gemini we already use?

Honestly, wading through the noise is challenging. Even I had to consciously cut through a dense jungle of jargon to get clarity.

I've seen simple summarization tools suddenly rebranded as "Content Agents" and basic API connectors bizarrely labeled "Integration Agents."

It often feels like we're drowning in marketing slop, making it hard to distinguish genuine innovation from just clever (or desperate) branding.

If I find it confusing, many Product Managers, especially those newer to the AI space, must feel the same way.

Here's the critical part: This confusion isn't just annoying; it's dangerous for us PMs.

Building products based on misunderstood hype leads to predictable disasters: blown budgets, missed deadlines, features that utterly flop, and eroded user trust.

Our core job is to cut through the BS, understand the fundamental capabilities and limitations, and guide our teams to build products that deliver tangible, measurable value, not to chase the latest buzzword.

So, consider this your clear-headed, no-BS guide. We're going to:

Dissect the real difference between AI Assistants and AI Agents.

Introduce a practical framework (Agent Levels) to understand the spectrum of capabilities.

Peek under the hood at how these systems actually work (including Multi-Agent Systems).

Equip you with a "Reality Check" Litmus Test to evaluate claims you encounter.

Let's get grounded and move from hype to reality.

PS: Consider this your secret AI Agent Product Strategy (and Sanity) guide.

TL;DR (The Busy PM's Summary)

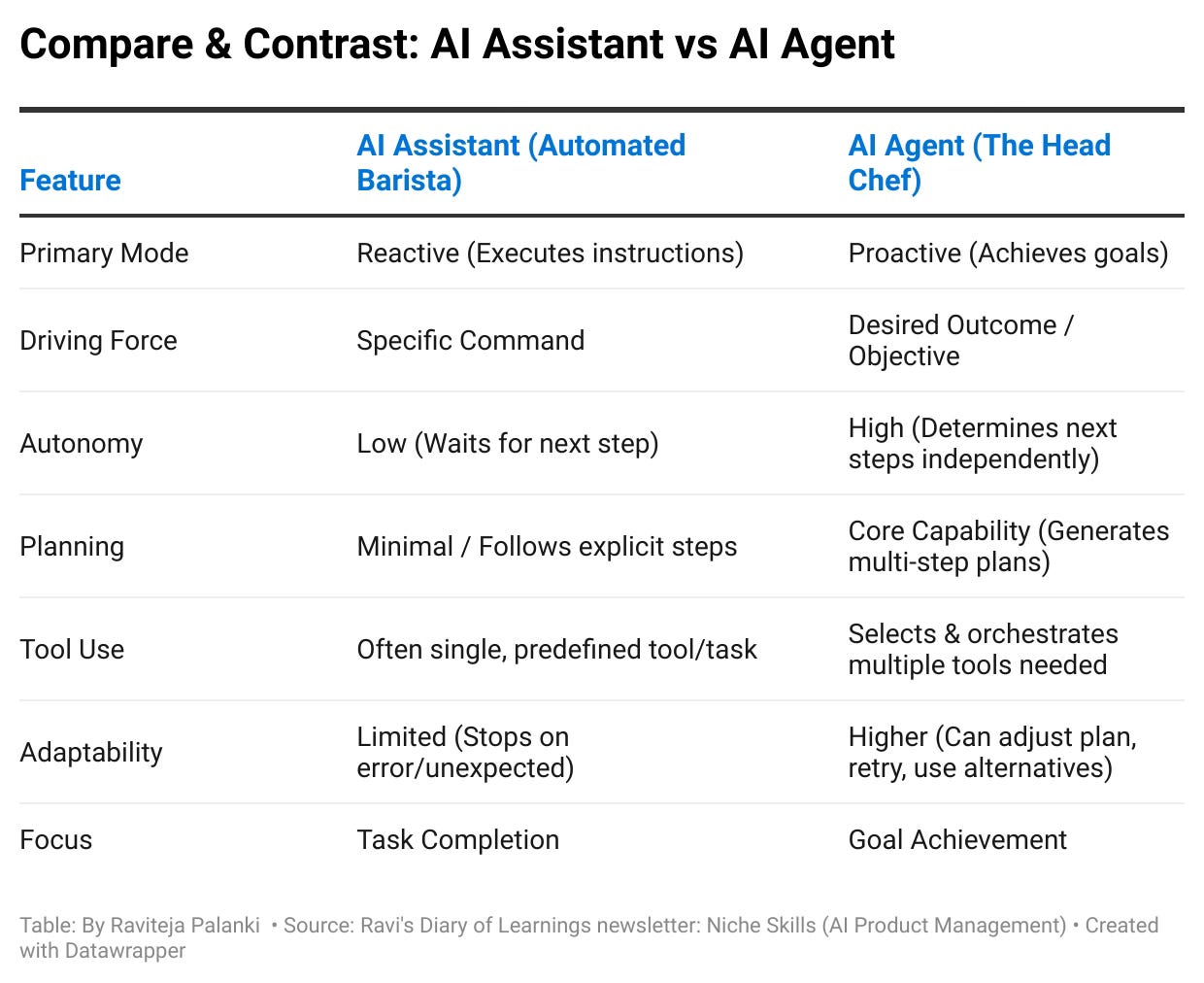

Assistants vs. Agents: Assistants react to specific commands (like ChatGPT executing your prompt). Agents proactively achieve goals (like planning and executing a multi-step task).

It's a Spectrum: Not all "Agents" are equal. We'll use Levels (Task Executor, Workflow Coordinator, Autonomous Strategist) to differentiate capabilities, from simple automation to complex problem-solving.

Beware of "Agent-Washing": Marketing hype is rampant. Many things called "agents" are just capable Assistants or basic automation in disguise. A quick Litmus Test will help you spot the difference.

Why PMs MUST Care: Understanding this distinction is crucial for creating realistic roadmaps, identifying true value, managing complexity and cost, avoiding hype traps, and ultimately building valuable, profitable AI products.

Table of Contents

Introduction: The "Agent" Overload - Let's Get Real

Ground Zero: The AI Assistant - Your Eager but Literal Executor

The Real Deal: AI Agents - Owning the Outcome

Levels of Agent Autonomy: A PM's Framework

Level 1: The Task Executor Agent 🤖 (Simple Goal Automation)

Level 2: The Workflow Coordinator Agent 🧑‍💼 (Multi-Step Orchestration)

Level 3: The Autonomous Strategist Agent 🧠 (Complex Problem Solving with Multi-Agent Systems)

How Agents Actually Work: A PM Primer ⚙️

The Core Agent Architecture

Connecting Architecture to Agent Levels

Stepping Up: Multi-Agent Systems (MAS)

Recent Advancements & Real-World Examples (Q1 2025 Focus)

Your PM Agent Reality Check: The Litmus Test ✅ ❌

Why This Clarity is Your PM Superpower (Connecting to Value & Profit)

Conclusion: From Hype to High-Value, Profitable Products

Ground Zero: The AI Assistant - Your Eager but Literal Executor

Before we dive into the complexities of Agents, let's firmly plant our feet on familiar ground: the AI Assistant.

Think about the AI tools you likely interact with daily – ChatGPT, Gemini, Claude, maybe even more specialized tools for writing code snippets or summarizing documents based on your prompts. These are powerful examples of AI Assistants.

👉 Concept: AI Assistants are incredibly potent instruction followers. They excel at executing specific, well-defined tasks when you tell them exactly what to do. They are reactive masters of execution within clearly defined constraints.

👉 Analogy: The Automated Coffee Machine Barista ☕

Imagine a super high-end, fully automated coffee machine in a fancy café. It's sleek, fast, and precise.

You walk up and give it precise instructions via a touchscreen: "Make one large oat milk latte, extra hot, with one packet of raw sugar."

The machine follows that sequence perfectly:

Grinds the precise amount of beans (Tool 1: Grinder).

Tamps and pulls the espresso shot (Tool 2: Espresso Maker).

Steams the oat milk to the desired temperature (Tool 3: Steamer).

Combines the espresso and milk (Tool 4: Dispenser).

Adds the single packet of sugar (Tool 5: Sugar Dispenser).

It performs this task flawlessly and efficiently whenever you give it that specific command.

But notice what it doesn't do. It won't:

Suggest a new seasonal pumpkin spice latte it "thinks" you might like.

Proactively alert the manager that it's running low on oat milk.

Improvise if it runs out of raw sugar (it stops or shows an error).

Engage in small talk about the weather while making your drink.

Plan the café's entire weekly menu or manage inventory across all supplies.

It's brilliant at executing the defined task based on your explicit input, but lacks initiative, planning capabilities, and broader goal awareness. That's your AI Assistant.

🤔 The "Agent-Washing" Trap

Here’s where the marketing hype often derails clarity.

A startup might launch a chatbot that can look up order statuses in one specific Shopify database and excitedly call it an "E-commerce Agent."

Or it might release a tool that drafts cold emails based only on a rigid template you provide and boldly label it a "Sales Agent."

Be critical.

User Command -> Assistant Executes Task (using Tool 1, Tool 2...) -> Result

If the system primarily reacts to a direct command, performs a narrowly defined task using a fixed process or tool, and doesn't exhibit independent planning or broader goal-setting, it's almost certainly an Assistant, regardless of the fancy "Agent" label slapped onto it.
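To make that shape concrete, here is a minimal Python sketch of the Assistant pattern, using the barista example. The tool functions and the `SUGAR_STOCK` inventory are hypothetical stand-ins: one command runs one developer-defined sequence, and when something goes wrong the run simply stops.

```python
# Hypothetical "tools": each one does exactly one narrow job.
def grind(order):
    return {**order, "ground": True}

def pull_shot(order):
    return {**order, "espresso": True}

def steam_milk(order):
    return {**order, "milk": order["milk_type"]}

SUGAR_STOCK = {"raw": 0}  # hypothetical inventory: raw sugar has run out

def add_sugar(order):
    kind = order.get("sugar")
    if kind is None:
        return order  # no sugar requested
    if SUGAR_STOCK.get(kind, 0) <= 0:
        raise RuntimeError(f"out of {kind} sugar")  # no improvising, just an error
    SUGAR_STOCK[kind] -= 1
    return {**order, "sugar_added": kind}

# The sequence is fixed by the developer, not chosen by the system.
FIXED_PIPELINE = [grind, pull_shot, steam_milk, add_sugar]

def assistant(command: dict) -> dict:
    """Command in, fixed steps, result out: no planning, no adaptation."""
    result = dict(command)
    for step in FIXED_PIPELINE:
        result = step(result)  # any exception stops the whole run
    return result
```

Calling `assistant({"drink": "latte", "milk_type": "oat"})` completes the drink. Adding `"sugar": "raw"` raises an error instead of suggesting a substitute. Nothing in the code decides *what* to do, only *how* to run the steps it was given.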

Unfortunately, many new AI startups, eager for attention and funding in this hot market, engage in misleading "Agent-washing."

Please don't fall for it.

The Real Deal: AI Agents - Owning the Outcome

Now, let's shift gears to AI Agents.

This is where the true paradigm shift lies: moving beyond mere instruction following to something much more powerful and autonomous.

👉 Concept: True AI Agents are defined by goal-orientation and autonomous action. You don't just give them a command; you provide them with an objective.

The Agent then figures out the steps, selects the necessary tools, and proactively works towards achieving that outcome, adapting its approach as needed along the way.

👉 Analogy: The Seasoned Head Chef 🧑‍🍳✨

Let's contrast our Automated Barista (the Assistant) with the experienced Head Chef running a world-class, Michelin-starred restaurant.

The restaurant owner doesn't tell the Chef, "Chop five onions, then sear 10 scallops, then whisk the sauce..."

Instead, the owner gives a high-level goal: "Develop and launch a profitable, innovative, and critically acclaimed spring tasting menu using sustainable, locally-sourced ingredients. We need this menu to help us earn (or maintain) our Michelin star."

Notice the difference? It's an outcome, not a list of tasks.

The Head Chef, like a trustworthy Agent, doesn't wait for step-by-step instructions. They:

Plan: Research food trends, design potential dishes, consider ingredient pairings, calculate costs and profitability, and plan the kitchen workflow.

Select & Orchestrate Tools: Source ingredients from unique local farms (Suppliers = Tool A), use specialized kitchen equipment (a sous-vide machine for precise cooking = Tool B, a Pacojet for making smooth purees = Tool C), and, crucially, direct the kitchen team: the Sous Chefs (Execution sub-agents), the Pastry Chef (Specialist sub-agent), and the Sommelier, the wine expert (Knowledge sub-agent). This team is the Chef's set of tools and collaborators.

Perceive Environment: Monitor ingredient seasonality and quality, track cost fluctuations, gauge early diner feedback on test dishes, and monitor competitor offerings.

Act: Test and refine recipes, train the kitchen and front-of-house staff, oversee plating and service execution.

Adapt: If the planned local asparagus isn't available due to weather, they substitute another seasonal vegetable, adjusting the dish accordingly. If a dish gets poor initial feedback, they tweak or replace it. If costs rise unexpectedly, they adjust sourcing or menu pricing.

The Head Chef owns the outcome—a successful menu launch that meets the complex criteria of innovation, quality, profitability, and critical acclaim.

That's the essence of an AI Agent – autonomous, goal-driven problem-solving.

⚖️ Compare & Contrast: Assistant vs. Agent

Let's put them side-by-side:

Levels of Agent Autonomy: A PM's Framework 🚦

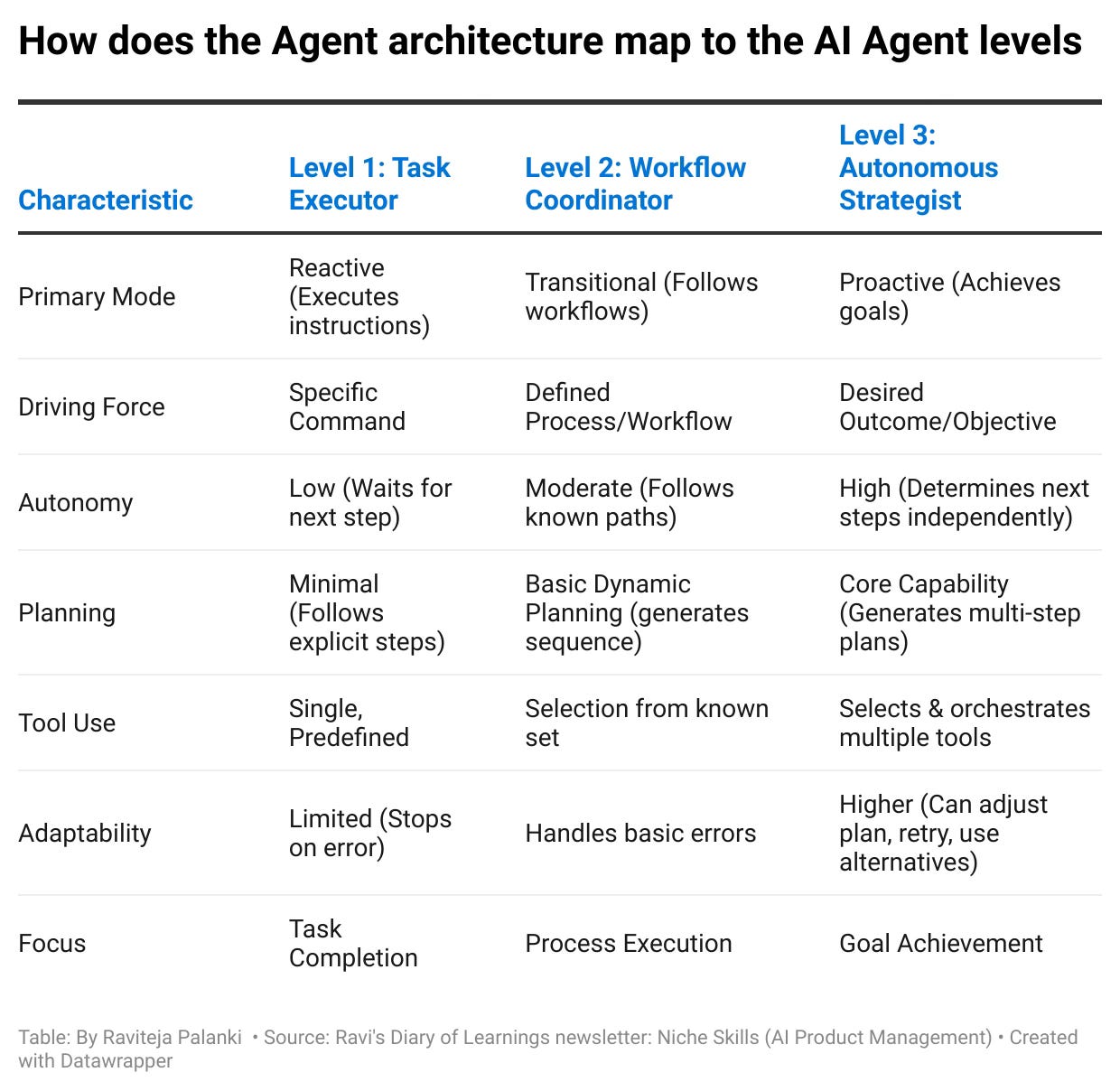

Okay, here's a crucial nuance: "Agent" isn't an all-or-nothing label. Just like cars have different levels of driving automation (from basic cruise control to fully autonomous concepts), AI Agents exist on a spectrum of capability and autonomy.

Thinking in levels helps us, as PMs, cut through the hype, set realistic expectations for our products, and understand what different systems labeled "agents" can actually do.

Let's break it down into three broad, practical levels:

Level 1: The Task Executor Agent 🤖 (Simple Goal Automation)

What it is: This is the most basic form of agent. It takes a specific, well-defined goal and executes a mostly predetermined, linear sequence of steps to achieve it.

It often uses one or maybe two tightly integrated tools.

There's minimal independent planning or adaptation involved.

Think of it as intelligent automation triggered by a goal, a step above just clicking a button in a script.

Example: A basic "Flight Booking Agent."

Goal: "Book the cheapest direct Ryanair flight from Dublin to London Stansted departing next Tuesday morning." (Note the specific constraints).

Action: The agent uses one specific Ryanair API (Tool). It finds the cheapest direct flight matching all the explicit criteria (airline, route, day, time window). It then executes the booking API call.

Analysis: The goal is narrow, the tool is fixed, and the plan is essentially hardcoded within the tool's function. It achieves the goal autonomously after being given the specific parameters, but has little flexibility.

Adaptation: Typically these wouldn’t adapt if something unexpected occurred, like the API returning an error or if the user’s preferences changed mid-task.
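A Level 1 agent of this kind fits in a few lines of Python. `search_flights` and `book_flight` are hypothetical stubs, not Ryanair's actual API, and the flight data is made up. The point is that the plan (search, filter, pick the cheapest, book) is hardcoded, and a failure is reported rather than worked around.

```python
from dataclasses import dataclass

@dataclass
class FlightGoal:
    origin: str
    destination: str
    date: str              # ISO date, e.g. "2025-04-08"
    latest_departure: str  # zero-padded "HH:MM" cutoff for "morning"

def search_flights(origin, destination, date):
    # Hypothetical stand-in for an airline search API (made-up data).
    return [
        {"id": "A1", "departs": "07:10", "price": 39.99, "direct": True},
        {"id": "A2", "departs": "09:45", "price": 24.99, "direct": True},
        {"id": "A3", "departs": "18:30", "price": 14.99, "direct": True},
    ]

def book_flight(flight_id):
    # Hypothetical stand-in for the booking call.
    return {"status": "confirmed", "flight_id": flight_id}

def level1_booking_agent(goal: FlightGoal):
    """Fixed, linear plan: search -> filter -> pick cheapest -> book."""
    flights = search_flights(goal.origin, goal.destination, goal.date)
    # Zero-padded "HH:MM" strings compare correctly as plain strings.
    eligible = [
        f for f in flights
        if f["direct"] and f["departs"] <= goal.latest_departure
    ]
    if not eligible:
        # No re-planning: a Level 1 agent just reports failure.
        return {"status": "failed", "reason": "no matching flight"}
    cheapest = min(eligible, key=lambda f: f["price"])
    return book_flight(cheapest["id"])
```

With a `"12:00"` cutoff, the agent books `A2`: it is the cheapest of the two morning flights, even though `A3` is cheaper overall.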

AI Assistants vs Level 1 Agents

AI Assistants and Level 1 agents share similarities in that both execute specific tasks based on direct user commands, but they differ in terms of autonomy and flexibility.

Agents, even at Level 1, can have a defined goal and follow a sequence of actions to achieve it. They might understand the parameters of the goal more flexibly than a typical Assistant. They can execute a pre-defined task in a more structured manner without explicit step-by-step instructions from the user, but their scope of action is still limited and often pre-determined.

Capability Rating (Illustrative):

Goal Orientation: ★★☆☆☆ (Handles simple, explicit, narrow goals)

Autonomy: ★★☆☆☆ (Executes a sequence autonomously, but little decision freedom)

Planning: ★☆☆☆☆ (Follows a fixed or straightforward, template-like plan)

Tool Use: ★★☆☆☆ (Uses one or maybe two predefined, tightly coupled tools)

Adaptability: ★☆☆☆☆ (Basic error flagging, minimal recovery/adjustment)

🔑 Key Takeaway:

Best suited for automating highly specific, repeatable tasks where the goal and process are crystal clear.

Think of the reliable execution of a known, simple workflow.

Low flexibility is the trade-off.

Level 2: The Workflow Coordinator Agent 🧑‍💼 (Multi-Step Orchestration)

What it is: This agent tackles broader goals requiring multiple distinct steps and coordinating different tools or information sources.

It performs basic planning (breaking the goal down into a sequence), selects the appropriate tool from a known set for each step, executes the workflow, and can handle some common errors or make minor adjustments along the way.

Example: A "Social Media Content Generation Agent."

Goal: "Take the key findings from our latest market research report (PDF uploaded) and generate draft posts for Twitter, LinkedIn, and a blog summary."

Plan:

Parse PDF & Extract Key Findings (Tool: Document Parser/Extractor).

Generate three distinct tweet drafts summarizing findings (Tool: Text Generation API + Twitter Constraints).

Generate a professional LinkedIn post draft discussing implications (Tool: Text Generation API + LinkedIn Style).

Generate a 300-word blog summary draft (Tool: Text Generation API).

Tool Selection: Uses appropriate text generation prompts/APIs tailored for each platform's style and length constraints (choosing from its known set of generation tools).

Adaptation: If the PDF parsing fails, it might ask the user to highlight key sections. If a generated tweet is too long, it might attempt to shorten it automatically.
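The same workflow can be written as a small Python program. Everything here is hypothetical: `parse_pdf` and `generate_text` are stubs standing in for a real document parser and an LLM call, and the findings are placeholder text. The sketch shows the three Level 2 ingredients: a generated sequential plan, tool selection from a known registry, and handling of two predictable failures.

```python
TWEET_LIMIT = 280  # X/Twitter's standard character limit

def parse_pdf(path):
    # Hypothetical parser stub; a real one would extract text from the file.
    if not path.endswith(".pdf"):
        raise ValueError("unsupported file")
    return ["Finding A: churn fell after onboarding redesign",
            "Finding B: mobile usage grew"]

def generate_text(findings, style, max_chars=None):
    # Hypothetical stub standing in for an LLM call with a style prompt.
    text = f"[{style}] " + "; ".join(findings)
    return text if max_chars is None else text[:max_chars]

TOOLS = {"parse": parse_pdf, "generate": generate_text}  # the known tool set

def plan(platforms):
    """Basic planning: one parse step, then one generation step per platform."""
    steps = [("parse", {})]
    steps += [("generate", {"style": p}) for p in platforms]
    return steps

def run_workflow(pdf_path, platforms):
    drafts, findings = {}, None
    for tool_name, args in plan(platforms):
        tool = TOOLS[tool_name]
        if tool_name == "parse":
            try:
                findings = tool(pdf_path)
            except ValueError:
                # Predictable failure: hand control back to the user.
                return {"needs_user": "Please highlight the key sections."}
        else:
            draft = tool(findings, args["style"])
            if args["style"] == "twitter" and len(draft) > TWEET_LIMIT:
                # Minor adjustment: regenerate under a length constraint.
                draft = tool(findings, args["style"], max_chars=TWEET_LIMIT)
            drafts[args["style"]] = draft
    return drafts
```

Notice what is missing: the plan's *shape* never changes. If a step fails in a way the developer did not anticipate, this agent has no way to invent a new approach, which is what separates Level 2 from Level 3.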

Manus AI is a good example of a Level 2 Workflow Coordinator Agent in this framework.

It effectively manages multi-step workflows, such as parsing and summarizing complex documents. For example, when tasked with processing a lengthy research paper, Manus can:

Plan by breaking the document into sections for analysis.

Select appropriate tools, like document parsers for extraction and NLP models for summarization.

Adapt its approach based on the document's structure or content type, responding to unexpected formats or errors.

Capability Rating (Illustrative):

Goal Orientation: ★★★☆☆ (Handles moderately complex goals with multiple parts)

Autonomy: ★★★☆☆ (Orchestrates a sequence of tool calls)

Planning: ★★★☆☆ (Generates relatively static, sequential plans)

Tool Use: ★★★☆☆ (Selects from a predefined set of known tools)

Adaptability: ★★☆☆☆ (Handles predictable errors, limited plan changes)

🔑 Key Takeaway:

Suitable for automating established business processes or workflows involving multiple known steps and different software tools/APIs.

It requires a clear goal definition and a predefined set of tools.

This is where we're seeing a lot of practical agent applications emerge today.

Relevant Tech: Frameworks designed for stateful, multi-step execution, such as LangGraph, CrewAI, and Microsoft AutoGen, as well as platforms for building custom agents, often target this level.

Level 3: The Autonomous Strategist Agent 🧠 (Complex Problem Solving with Multi-Agent Systems)

What it is: This is the most advanced level, tackling complex, sometimes ambiguous, or strategic goals.

It requires dynamic planning (creating and modifying plans significantly based on new information), sophisticated reasoning, potentially learning from interactions, and often involves discovering/selecting from a wide range of tools.

It frequently operates as part of a Multi-Agent System (MAS), coordinating with other specialized agents.

The aim is high-quality, robust outcomes even in uncertain, dynamic environments.

Example: A "Competitive Intelligence & Strategy Agent."

Goal: "Continuously monitor key competitors (X, Y, Z) and the broader market landscape for our SaaS product category. Identify emerging threats and opportunities, and propose actionable strategic responses quarterly (e.g., product adjustments, pricing changes, M&A targets)." (Note the complexity and strategic nature).

Plan: Highly dynamic and iterative.

Involves:

1. Monitoring news feeds, social media, financial reports, patent filings (Tools: News APIs, Web Scraping Agents, Financial Data APIs, Patent DBs).

2. Analyzing competitor product updates/marketing (Tool: Website Change Trackers, Ad Platform APIs).

3. Synthesizing findings into threat/opportunity assessments (Tool: Analysis/Reasoning Module).

4. Generating potential strategic responses using business frameworks (Tool: Strategy Framework Models).

5. Evaluating feasibility/impact of responses (Tool: Financial Modeling Tools, Internal Data APIs).

MAS: Likely uses specialized sub-agents to scrape specific sites, perform deep financial analyses, or translate foreign market news. The primary agent acts as the orchestrator.

Adaptation: Re-prioritizes monitoring based on market shifts (e.g., a new startup gaining traction), deep-dives into unexpected competitor moves (e.g., a surprise acquisition), and refines strategic options based on internal feedback or simulated outcomes.

Reliability: To ensure rigor for critical outputs like M&A suggestions, it might use "Contract" principles—formal agreements on deliverables and validation criteria.

Capability Rating (Illustrative):

Goal Orientation: ★★★★★ (Handles complex, strategic, potentially ambiguous goals)

Autonomy: ★★★★★ (High degree of self-direction, initiative, complex decisions)

Planning: ★★★★★ (Dynamic, adaptive, potentially hierarchical planning)

Tool Use: ★★★★★ (Selects, potentially discovers, orchestrates diverse/complex tools)

Adaptability: ★★★★★ (Robust learning, self-correction, major strategy shifts)

🔑 Key Takeaway:

Aims to be a strategic partner, tackling complex problems with high autonomy. Requires significant investment, robust architecture (often MAS), sophisticated orchestration, and rigorous evaluation.

It is still primarily emerging in advanced R&D or highly specialized enterprise deployments, but it represents the long-term vision of agentic AI.

🤔 Debunking within Levels:

Be extremely wary of claims of Level 3 autonomy, especially in general-purpose tools.

True strategic reasoning, dynamic planning across diverse tools, and robust adaptation to novel situations are exceptionally hard engineering challenges.

If a tool claims L3 capabilities, demand evidence.

Ask to showcase how it handles unexpected situations and recovers from failures, not just pre-rehearsed "happy path" demos.

Often, what's marketed as L3 is a capable L2 agent with a good sales pitch.

How Agents Actually Work: A PM Primer ⚙️

Alright, we've established the what (Assistants vs. Agents) and the how much (Levels of Autonomy). Now, let's peel back the cover and look at the how.

As a Product Manager, having a mental model of the underlying architecture is crucial for understanding capabilities, limitations, complexity, and where things might break.

Think of it like understanding the basics of a car's engine, transmission, and steering system – you don't need to be a mechanic, but knowing how they work together helps you understand performance, fuel efficiency, and potential issues.

The Core Agent Architecture (Single Agent)

At its heart, a single AI agent combines intelligence (the Brain) with the ability to perceive and act (Tools), all directed by a strategy (Orchestration).

💡 The Brain (Model):

This is the core intelligence engine, typically a powerful Large Language Model (LLM) – think Gemini, GPT-4, Claude 3, or similar. The LLM provides the foundational capabilities: understanding natural language, reasoning, summarizing, generating text, and making decisions based on the information it has.

PM Lens: The choice and quality of the underlying LLM significantly impact the agent's reasoning power, language fluency, and potential for bias or hallucination.

A more capable model enables more complex agency, but might also be slower or more expensive.

🛠️ The Senses & Hands (Tools/APIs):

This is arguably the most critical differentiator between a mere chatbot and an actual agent. Tools are the agent's connection to the world outside the LLM's internal knowledge.

They allow the agent to:

Perceive: Read data from databases (e.g., execute SQL), check inventory levels, get the latest stock prices, search the web, read files (PDFs, spreadsheets), check calendar availability.

Act: Send emails, update CRM records (e.g., Salesforce API), book flights (e.g., travel API), execute code (e.g., run Python script), post to social media, control smart home devices, or even call other agents.

PM Lens: An agent is only as capable as the tools it can access.

Key PM responsibilities include defining the necessary tools, ensuring reliable API access, handling authentication, and managing tool usage costs.

Without relevant tools, even the smartest LLM can't perform meaningful actions in the real world.

🧭 The Strategy (Orchestration Layer):

This is the conductor of the orchestra, the logic that tells the Brain how and when to use the Tools to achieve the overarching Goal.

It's often the most complex part to build and get right.

Key functions include:

Planning: Taking the user's high-level goal (e.g., "Plan my trip") and breaking it down into a sequence of actionable steps (e.g., 1. Clarify budget/dates. 2. Search flights tool. 3. Search hotels tool. 4. Check reviews tool. 5. Propose itinerary).

Techniques range from simple prompting strategies like Chain-of-Thought (CoT) ("Think step-by-step") or ReAct (Reason+Act interleaved) to more sophisticated, dynamic planning algorithms for complex goals (Level 3).
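A ReAct-style loop looks roughly like this in Python. `fake_llm` is a scripted stand-in for a real model (a production agent would send the transcript to an LLM and parse its reply), and both tools are hypothetical. The loop interleaves reasoning (Thought), tool calls (Action), and results (Observation) until the model produces a final answer or the step budget runs out.

```python
def fake_llm(transcript):
    """Scripted stand-in for an LLM: picks the next step from the transcript."""
    observations = [t for t in transcript if t.startswith("Observation:")]
    if not observations:
        return {"thought": "I need flight options first.",
                "action": "search_flights", "input": "DUB-STN"}
    if len(observations) == 1:
        return {"thought": "Now hotels near the airport.",
                "action": "search_hotels", "input": "Stansted"}
    summary = " | ".join(o[len("Observation: "):] for o in observations)
    return {"thought": "I have enough to propose a plan.",
            "final": "Itinerary: " + summary}

# Hypothetical tools returning canned results.
TOOLS = {
    "search_flights": lambda q: f"3 flights found for {q}",
    "search_hotels": lambda q: f"5 hotels found near {q}",
}

def react_agent(goal, llm=fake_llm, max_steps=5):
    transcript = [f"Goal: {goal}"]
    for _ in range(max_steps):
        step = llm(transcript)                        # Reason
        transcript.append(f"Thought: {step['thought']}")
        if "final" in step:
            return step["final"], transcript
        transcript.append(f"Action: {step['action']}({step['input']})")
        result = TOOLS[step["action"]](step["input"])  # Act
        transcript.append(f"Observation: {result}")    # Observe
    return None, transcript  # step budget exhausted
```

The `max_steps` budget is a deliberate design choice. Without it, a confused model can loop forever and quietly burn API spend.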

Memory: Agents need context to function coherently. This includes short-term memory (what was just said, what tools were just used) and potentially long-term memory (user preferences learned over time, past successful plans, knowledge about specific tools).

Effective memory management prevents the agent from seeming forgetful or repeating itself.
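Here is a minimal Python sketch of the two memory tiers. It assumes a simple design: a bounded `deque` holds short-term conversational context, and a plain dict stands in for whatever persistent store (database, vector index) a real system would use for long-term preferences. The class and method names are hypothetical.

```python
from collections import deque

class AgentMemory:
    """Short-term: a bounded window of recent turns. Long-term: a key-value store."""

    def __init__(self, window=4):
        self.short_term = deque(maxlen=window)  # oldest turns drop off automatically
        self.long_term = {}                     # stand-in for a persistent store

    def remember_turn(self, role, text):
        self.short_term.append((role, text))

    def learn(self, key, value):
        self.long_term[key] = value

    def build_context(self):
        """Assemble the context the model sees on its next call."""
        prefs = [f"{k}={v}" for k, v in sorted(self.long_term.items())]
        turns = [f"{role}: {text}" for role, text in self.short_term]
        return "Known preferences: " + ", ".join(prefs) + "\n" + "\n".join(turns)
```

The window size is a real product trade-off. A larger window means fewer "forgetful" moments, but every call costs more tokens.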

Tool Selection & Use: Based on the current step in the plan, the orchestrator must decide which tool is the right one for the job (e.g., use the 'search_flights' API now, not the 'send_email' API).

It needs to format the request correctly for the chosen tool, execute the tool call (often via an API), and then parse the tool's response (which might be structured data, text, or an error message) so the Brain can understand the result and decide on the next action.
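The select, format, execute, and parse cycle can be sketched like this. It assumes the model emits its tool request as JSON of the form `{"tool": ..., "args": {...}}`, which is a hypothetical format; real frameworks each define their own. The key design choice is that failures come back as structured observations rather than crashes, so the Brain can read the error and decide what to do next.

```python
import json

def search_flights(origin: str, destination: str) -> dict:
    # Hypothetical "perceive" tool returning structured data.
    return {"flights": [{"id": "A1", "price": 42.0}]}

def send_email(to: str, body: str) -> dict:
    # Hypothetical "act" tool; the side effect is stubbed out.
    return {"sent": True, "to": to}

TOOLS = {"search_flights": search_flights, "send_email": send_email}

def call_tool(model_output: str) -> str:
    """Parse the model's request, run the chosen tool, return an observation."""
    try:
        request = json.loads(model_output)
        tool = TOOLS[request["tool"]]          # selection: look up by name
        result = tool(**request["args"])       # execution with the model's arguments
        return json.dumps({"ok": True, "result": result})
    except json.JSONDecodeError:
        return json.dumps({"ok": False, "error": "malformed request"})
    except KeyError as e:
        return json.dumps({"ok": False, "error": f"unknown tool or field: {e}"})
    except TypeError as e:
        # Typically wrong or missing arguments for the chosen tool.
        return json.dumps({"ok": False, "error": f"bad arguments: {e}"})
```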

Monitoring & Adaptation: This is crucial for robustness. The orchestrator needs to track progress.

Did the last tool call succeed? Did it return the expected information? Did the user interrupt with new instructions?

Based on this feedback loop, the orchestrator decides whether to continue the plan, retry a failed step (maybe with a different tool), ask the user for clarification ("Did you mean Paris, France or Paris, Texas?"), or fundamentally change the plan if the initial approach isn't working.
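Here is a hedged Python sketch of that decision logic. The step format, the `fallbacks` mapping, and the tools are all hypothetical. The function asks for clarification on ambiguous steps, retries the primary tool with exponential backoff, and then tries alternative tools. If everything fails, it returns a `replan` signal so the planner can change course.

```python
import time

class ToolError(Exception):
    """Raised by a tool when a call fails (timeout, outage, bad response)."""

def run_step(step, tools, fallbacks, retries=2, delay=0.0):
    """Execute one plan step, escalating from retry to fallback to re-plan."""
    if step.get("ambiguous"):
        return {"status": "ask_user", "question": step["clarifying_question"]}
    for name in [step["tool"]] + fallbacks.get(step["tool"], []):
        for attempt in range(retries):
            try:
                return {"status": "ok", "tool": name, "result": tools[name](step["input"])}
            except ToolError:
                time.sleep(delay * (2 ** attempt))  # back off before the next attempt
    # Every option failed: hand the problem back to the planner.
    return {"status": "replan", "failed_step": step["tool"]}

# Hypothetical tools for illustration.
def primary_search(query):
    raise ToolError("service unavailable")  # simulate an outage

def backup_search(query):
    return f"results for {query}"
```

With `{"primary": primary_search, "backup": backup_search}` and `fallbacks={"primary": ["backup"]}`, the step succeeds through the backup tool. Without a fallback, it returns `replan`.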

Protocols such as MCP (Model Context Protocol) can strengthen this orchestration layer. MCP is not a planning technique. It is an open standard for how an agent discovers and calls external tools and data sources, so the orchestrator can reach many systems through one consistent interface instead of a bespoke integration for each.

This structured approach makes interaction with the environment and task completion more dependable, especially when combined with robust reasoning techniques.
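Under the hood, MCP messages use JSON-RPC 2.0. A client discovers a server's tools with `tools/list` and invokes one with `tools/call`. The Python sketch below only builds and parses those messages. It omits the initialization handshake and the transport (stdio or HTTP), and the `get_weather` tool and sample response are hypothetical. Treat it as an illustration of the message shapes, and check the MCP specification for the authoritative details.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched

def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request, the message format MCP is built on."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

# 1. Discover which tools the server offers.
list_tools = jsonrpc_request("tools/list")

# 2. Call one of them (tool name and arguments are hypothetical).
call = jsonrpc_request("tools/call", {"name": "get_weather", "arguments": {"city": "Dublin"}})

def extract_text(response_json):
    """Pull the text blocks out of a tools/call result, flagging tool errors."""
    result = json.loads(response_json)["result"]
    texts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
    return ("ERROR: " if result.get("isError") else "") + "\n".join(texts)

# A made-up response shaped like what an MCP server might send back.
sample_response = json.dumps({
    "jsonrpc": "2.0", "id": 2,
    "result": {"content": [{"type": "text", "text": "Dublin: light rain"}], "isError": False},
})
```

For a PM, the takeaway is that once a system speaks this protocol, any MCP-compatible agent can discover and use it without custom glue code.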

Details on MCP here:

Model Context Protocol (MCP): The Universal Translator That Will Redefine our AI Products


Connecting Architecture to Agent Levels

Stepping Up: Multi-Agent Systems (MAS)

For truly complex problems (like our Head Chef running the restaurant or the Competitive Intelligence Agent), building one single, monolithic agent that does everything becomes incredibly difficult, inefficient, and brittle.

Enter Multi-Agent Systems (MAS).

🤔 Why MAS? Think division of labor. Like a company with specialized departments (Sales, Marketing, Engineering), MAS uses a team of specialized agents. The benefits:

Specialization & Expertise: Each agent can be optimized for a specific task (e.g., a 'Data Analysis Agent', a 'Web Scraping Agent', a 'Report Writing Agent').

Modularity: Easier to develop, test, update, or replace individual agents without disrupting the whole system.

Scalability: Can add more agents to handle increased workload or new tasks.

Robustness/Fault Tolerance: If one specialized agent fails, the system might be able to route around it or use an alternative rather than crashing the entire process.

⚙️ How it Works: Agents in an MAS need to communicate and coordinate. Common patterns include:

Hierarchical: A "Manager" or "Orchestrator" agent breaks down the main goal and delegates sub-tasks to specialized "Worker" agents (like the Head Chef directing the Sous Chefs).

Collaborative/Sequential: Agents work together, perhaps passing the task along an "assembly line," with each agent adding its contribution (e.g., Agent 1 scrapes data, Agent 2 analyzes it, Agent 3 visualizes it).

Peer-to-Peer: Agents might hand off tasks directly if they realize another agent is better suited, providing resilience against initial misrouting.
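The hierarchical and sequential patterns can be combined in one Python sketch. The three "agents" are hypothetical plain functions standing in for LLM-backed specialists. The manager's decomposition is hardcoded where a real orchestrator would ask a model to plan, and peer-to-peer handoff is left out for brevity.

```python
# Hypothetical specialist agents, reduced to plain functions for illustration.
def scraper_agent(task):
    return {"data": [3, 5, 8]}  # pretend these values were scraped

def analyst_agent(task):
    values = task["data"]
    return {"data": values, "mean": sum(values) / len(values)}

def writer_agent(task):
    return {"report": f"Average of {len(task['data'])} data points: {task['mean']:.2f}"}

WORKERS = {"scrape": scraper_agent, "analyze": analyst_agent, "write": writer_agent}

def sequential_pipeline(task, stages):
    """Assembly line: each agent's output becomes the next agent's input."""
    for stage in stages:
        task = WORKERS[stage](task)
    return task

def manager_agent(goal):
    """Hierarchical: the manager decides which workers run, and in what order."""
    # A real manager would ask an LLM to decompose the goal; this rule is a placeholder.
    stages = ["scrape", "analyze", "write"] if "report" in goal else ["scrape"]
    return sequential_pipeline({"goal": goal}, stages)
```

`manager_agent("weekly report")` runs all three specialists, while `manager_agent("raw data")` stops after scraping. The same workers are reused, and only the orchestration differs.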

🎯 The PM Challenge: Designing effective MAS isn't just about building individual agents.

It requires careful thought about:

How do agents communicate?

What information do they share?

Who defines the overall plan?

How are conflicts resolved?

How do you evaluate the system's performance, not just the parts?

It adds architectural complexity but unlocks the potential to solve much harder, multi-faceted problems.

Recent Advancements & Real-World Examples

The world of AI agents is moving incredibly fast. Here are some key trends and examples relevant as of early 2025, focusing on what enables more capable agents:

Sophisticated Orchestration Frameworks

Beyond simple scripting, frameworks like LangGraph are maturing.

They allow developers to define agent interactions as graphs rather than linear chains.

This naturally supports cycles (plan -> act -> reflect -> re-plan), conditional logic, and more complex multi-agent collaborations, essential for building more robust Level 2 and Level 3 agents.
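The graph idea can be shown without any framework. The sketch below is plain Python, not LangGraph's actual API. Nodes are functions that update a shared state and return the name of the next node, which lets the reflect step route back to plan (a cycle) until a check passes. The node names and the quality check are hypothetical.

```python
def plan(state):
    # First pass plans a bare draft; after a failed review, the plan adds a fix.
    state["plan"] = ["draft"] if state["attempts"] == 0 else ["draft", "add_sources"]
    return "act"

def act(state):
    state["attempts"] += 1
    state["output"] = "+".join(state["plan"])
    return "reflect"

def reflect(state):
    # Conditional edge: loop back to planning until the output passes the check.
    return "done" if "add_sources" in state["output"] else "plan"

GRAPH = {"plan": plan, "act": act, "reflect": reflect}

def run_graph(entry="plan", max_transitions=20):
    state = {"attempts": 0, "plan": [], "output": ""}
    node = entry
    for _ in range(max_transitions):  # guard against cycles that never exit
        if node == "done":
            return state
        node = GRAPH[node](state)
    raise RuntimeError("graph did not terminate")
```

Here the run goes plan, act, reflect, plan, act, reflect, and then stops, so `run_graph()` ends with two attempts. A linear chain cannot express that loop without special-casing it.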

Enhanced Planning & Reasoning

While basic ReAct and CoT are common, research actively pushes towards more robust planning.

This includes techniques where LLMs critique and refine their plans or methods that integrate ideas from classical AI planning to better handle complex dependencies and uncertainty.

The goal is agents that don't just follow steps but truly strategize.
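Dependency ordering, one classical-planning idea, is easy to show with Python's standard-library `graphlib` (Python 3.9+). The sub-tasks are hypothetical, borrowed from the trip-planning example earlier. `critique_plan` is a simple rule-based check standing in for the kind of self-critique an LLM might perform: it catches steps that depend on something the plan never defines, before a topological sort orders the rest.

```python
from graphlib import TopologicalSorter, CycleError

# Hypothetical sub-tasks mapped to the sub-tasks they depend on.
subtasks = {
    "clarify_budget": set(),
    "search_flights": {"clarify_budget"},
    "search_hotels": {"clarify_budget"},
    "check_reviews": {"search_hotels"},
    "propose_itinerary": {"search_flights", "check_reviews"},
}

def critique_plan(deps):
    """Return a list of problems with the plan (empty means no missing steps)."""
    problems = []
    known = set(deps)
    for task, requires in deps.items():
        for r in requires - known:
            problems.append(f"{task} depends on undefined step {r}")
    return problems

def order_plan(deps):
    """Produce an execution order that respects every dependency, or explain why not."""
    problems = critique_plan(deps)
    if problems:
        return None, problems
    try:
        return list(TopologicalSorter(deps).static_order()), []
    except CycleError as e:
        return None, [f"circular dependency: {e.args[1]}"]  # args[1] lists the cycle
```

Independent steps such as `search_flights` and `search_hotels` may come out in either order, and that freedom is also what lets an orchestrator run them in parallel.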

Anthropic's Model Context Protocol (MCP)

MCP has emerged as a leading standard for connecting AI agents to tools and data. Open-sourced by Anthropic in late 2024, MCP connects AI assistants to the systems where data lives, breaking down silos across enterprise environments. With OpenAI and Microsoft announcing support in early 2025, MCP is positioned to become the standard protocol for plugging AI agents into tools and data sources across platforms.

Reliable Tool Use & Grounding

Making agents use tools reliably is paramount. Advancements focus on:

Better tool selection (choosing the right API from potentially hundreds).

Graceful error handling when APIs fail or return unexpected results.

Grounding agent responses firmly in the information retrieved from tools to reduce hallucination.

n8n's AI agent framework exemplifies progress in this area. Their platform has gained significant traction for its ability to connect AI models like GPT-4 to various tools, allowing the AI to select and use appropriate tools to fulfill requests.

Their visual builder makes creating complex AI agent workflows accessible without deep coding knowledge, and recent updates allow AI to automatically configure parameters, dramatically reducing setup time.

Agentic RAG is another prime example. Here, agents actively manage the search process itself (refining queries, selecting sources, even validating information), which can yield noticeably higher-quality retrieval than traditional, single-pass RAG.
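Here is a toy version of that loop in Python. It assumes a tiny in-memory document store, naive keyword search, and a `refine` function that stands in for an LLM rewriting the query (here it just drops the last term). All names and documents are hypothetical. The structure is the point: search, validate, refine, retry.

```python
# Hypothetical document store standing in for a search index.
DOCS = {
    "d1": "Q1 churn report: churn fell to 4.1% after onboarding changes",
    "d2": "Team offsite agenda and travel notes",
    "d3": "Pricing experiment: annual plan uptake rose 9%",
}

def search(query):
    """Naive keyword search: ids of docs containing every query term."""
    terms = query.lower().split()
    return [doc_id for doc_id, text in DOCS.items() if all(t in text.lower() for t in terms)]

def refine(query):
    """Stand-in for an LLM rewriting the query: drop the last, most specific term."""
    return " ".join(query.split()[:-1])

def agentic_retrieve(query, must_mention, max_rounds=3):
    for _ in range(max_rounds):
        hits = search(query)
        # Validation step: keep only hits that actually mention the key entity.
        validated = [h for h in hits if must_mention in DOCS[h].lower()]
        if validated:
            return validated, query
        query = refine(query)  # no usable hits: rewrite the query and retry
    return [], query
```

`agentic_retrieve("churn onboarding 2024", "churn")` finds nothing on the first pass. It then retries with `"churn onboarding"` and returns `["d1"]`. A traditional single-shot RAG call would have returned nothing.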

OpenAI's recent tooling advancements, including their Responses API, built-in tools, and Agents SDK with MCP support, demonstrate this growing focus on reliability. These tools provide more structured ways to build agents that can reliably execute complex tasks while maintaining appropriate guardrails.

Practical Multi-Agent Systems (MAS) Platforms

We're moving beyond theory. Platforms specifically designed to build, deploy, and manage MAS in enterprises are emerging.

Google Agentspace exemplifies this by providing infrastructure for coordinating fleets of specialized "Assistant" (user-facing) and "Automation" (background) agents, enabling the "Manager of Agents" paradigm.

Manus AI's architecture leverages this multi-agent approach by employing specialized sub-agents for tasks like travel planning, financial analysis, and code deployment. This allows it to execute a diverse range of tasks from creating personalized travel itineraries to analyzing stocks with visual dashboards and deploying code.

Other startups also use MAS principles to build platforms focused on specific verticals (e.g., software development, scientific research).

Intensified Focus on Reliability ("Contracts")

Realizing that current agents can be brittle has spurred interest in more formal approaches for high-stakes tasks (Level 3).

The concept of "Contracts" involves defining an agent's task with much greater precision: clear deliverables, measurable specs, scope boundaries, even allowing the agent to negotiate ambiguities before starting.

This aims for verifiable, reliable execution.
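A contract can be represented as data plus checks. The Python sketch below is one hypothetical way to do it, not an established library or standard. The contract lists deliverables, named validation functions for measurable specs, and keys that are explicitly out of scope, and `validate` returns every unmet requirement.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Contract:
    """A hypothetical task contract: what must be delivered and how it is checked."""
    deliverables: list[str]
    checks: dict[str, Callable[[dict], bool]]  # spec name -> validation function
    out_of_scope: list[str] = field(default_factory=list)

    def validate(self, output: dict) -> list[str]:
        """Return every unmet requirement; an empty list means the contract is met."""
        failures = [f"missing deliverable: {d}" for d in self.deliverables if d not in output]
        failures += [f"failed spec: {name}" for name, check in self.checks.items() if not check(output)]
        failures += [f"out of scope: {k}" for k in self.out_of_scope if k in output]
        return failures

# Hypothetical contract for the M&A shortlist example.
ma_contract = Contract(
    deliverables=["targets", "rationale"],
    checks={
        "3-5 targets": lambda o: 3 <= len(o.get("targets", [])) <= 5,
        "every target has a source": lambda o: all(t.get("source") for t in o.get("targets", [])),
    },
    out_of_scope=["purchase_offer"],  # the agent may recommend, not act
)
```

Because `validate` lists *every* failure rather than stopping at the first, the same object can be shown to the agent up front (to negotiate ambiguities) and used afterwards as an acceptance test.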

Maturing Agent Evaluation (Agent Evals & Ops)

Building agents without robust evaluation is like flying blind. The field of Agent Evaluations and Operations is rapidly developing tools and methodologies to assess agent performance systematically.

This includes evaluating not just the outcome but also the process (trajectory) the agent took, its tool usage efficiency, and its robustness to failure.

Automated evaluation using LLMs as judges and dedicated platforms like Vertex AI Eval Service are becoming essential parts of the agent development lifecycle.
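Here is a minimal Python sketch of trajectory-level scoring. It assumes each step in a run is logged as a dict with a `tool` name and an optional `error`. The metric names are hypothetical, and the exact-match outcome check is a placeholder for the LLM-as-judge or rubric scoring a real eval pipeline would use.

```python
def evaluate_run(trajectory, final_answer, expected_answer, expected_tools, max_steps):
    """Score one agent run on its outcome and on the path it took."""
    tools_used = [step["tool"] for step in trajectory]
    errors = sum(1 for step in trajectory if step.get("error"))
    return {
        "outcome_correct": final_answer == expected_answer,           # outcome
        "used_required_tools": set(expected_tools) <= set(tools_used),  # process
        "within_step_budget": len(trajectory) <= max_steps,            # efficiency
        "redundant_calls": len(tools_used) - len(set(tools_used)),     # repeated tool use
        "error_rate": errors / len(trajectory) if trajectory else 0.0, # robustness
    }
```

A run can get the right answer while still scoring poorly on the other metrics, for example by retrying the same failing tool several times. That is exactly the hidden cost and fragility that outcome-only testing misses.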

Your PM Agent Reality Check: The Litmus Test ✅ ❌

Okay, knowledge is power.

Now let's turn that understanding into a practical tool.

You will encounter countless claims about "AI Agents" – from vendors, startups, maybe even internal teams.

You need a way to quickly cut through the marketing fluff and assess the actual capability level being discussed or demonstrated.

Use this rigorous checklist. Ask these questions. Be skeptical. Demand evidence.

AI Agent Reality Check (Can the system consistently demonstrate the ability to...)

🎯 Interpret & Pursue a GOAL (vs. just execute a command)?

✅ YES: Understands a desired end-state, even if slightly ambiguous, and initiates action towards it (e.g., "Find the best strategy to increase user retention," "Plan a marketing campaign for Product X," "Resolve this customer support issue"). (Potential Agent)

❌ NO: Requires explicit, step-by-step instructions for every single action or only understands very narrow, predefined commands (e.g., "Summarize this text," "Search Google for X," "Send email Y to Z"). (Likely Assistant)

PM Watch Out For: Systems needing excessive prompt engineering gymnastics to simulate goal pursuit. Proper goal orientation should feel more inherent to the system's design.

Level Indication: Strong YES suggests potential Agent (L1-L3). Strong NO indicates Assistant.

🗺️ Generate its OWN Multi-Step PLAN to achieve the goal?

✅ YES: Decomposes the goal into a logical sequence of sub-tasks or actions, determining the how independently (e.g., figures out it needs to search, then analyze, then summarize, then format).

❌ NO: Follows a completely fixed, hardcoded script, relies entirely on a human-defined workflow template, or only performs single-step actions for each command.

PM Watch Out For: "Plans" that are just the LLM listing hypothetical steps it could take, without the orchestration actually executing that plan. Ask: "Can you show me the agent following that plan?"

Level Indication: YES suggests L2 or L3 Agent. NO suggests Assistant or L1 Agent.
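The difference between a listed plan and an executed one is easy to see in code. In this minimal sketch, `make_plan` is a hard-coded stand-in for an LLM planner; that is an assumption made to keep the example runnable. The orchestrator then actually calls a tool for every step and records the trajectory. That recorded trajectory is the evidence worth asking for in a demo.

```python
from typing import Callable

Tool = Callable[[dict], dict]


def make_plan(goal: str) -> list[str]:
    # Hypothetical stand-in for an LLM planner that decomposes the goal into steps.
    return ["search", "analyze", "summarize", "format"]


def execute_plan(goal: str, tools: dict[str, Tool]) -> tuple[dict, list[str]]:
    """Run every planned step, threading state through; return final state and trajectory."""
    state: dict = {"goal": goal}
    trajectory: list[str] = []
    for step in make_plan(goal):
        state = tools[step](state)  # the orchestrator actually calls the tool
        trajectory.append(step)
    return state, trajectory


# Toy tools so the sketch runs end to end.
toy_tools: dict[str, Tool] = {
    "search": lambda s: {**s, "docs": ["doc A", "doc B"]},
    "analyze": lambda s: {**s, "findings": len(s["docs"])},
    "summarize": lambda s: {**s, "summary": f"{s['findings']} relevant documents"},
    "format": lambda s: {**s, "report": s["summary"].upper()},
}
final_state, steps = execute_plan("Research competitor pricing", toy_tools)
print(steps)                  # ['search', 'analyze', 'summarize', 'format']
print(final_state["report"])  # 2 RELEVANT DOCUMENTS
```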

🔧 Select and Use Appropriate TOOL(s) from a diverse TOOLBOX?

✅ YES: Intelligently chooses the right tool (specific API, database query, function call, another agent) from a set of multiple, distinct options based on the current step in its plan. Can handle different tool inputs/outputs.

❌ NO: Only uses one specific tool for its core function, the available tools are trivial or functionally identical, or the human must explicitly tell it which tool to use for each step.

PM Watch Out For: Demos showing only one prominent tool being used. Ask: "What other tools does it have access to? How does it decide which one to use if multiple could be relevant?"

Level Indication: Strong YES (multiple, distinct tools with selection logic) suggests L2 or L3 Agent. NO suggests Assistant or L1 Agent.
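Selection logic is what turns a collection of integrations into a toolbox. The sketch below uses a deliberately naive keyword-overlap score. It stands in for the model-driven tool selection (for example, LLM function calling) that a real agent would more likely use. The tools and their descriptions are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

STOPWORDS = {"a", "an", "the", "to", "from", "over", "of", "for"}


@dataclass(frozen=True)
class ToolSpec:
    name: str
    description: str


def _keywords(text: str) -> set[str]:
    return {w for w in text.lower().split() if w not in STOPWORDS}


def select_tool(step: str, toolbox: list[ToolSpec]) -> Optional[ToolSpec]:
    """Pick the tool whose description overlaps most with the step.

    A naive stand-in for model-driven selection. Returns None if nothing fits,
    so the agent can replan or ask instead of forcing a bad tool call.
    """
    step_words = _keywords(step)
    best_tool, best_score = None, 0
    for tool in toolbox:
        score = len(step_words & _keywords(tool.description))
        if score > best_score:
            best_tool, best_score = tool, score
    return best_tool


TOOLBOX = [
    ToolSpec("crm_query", "fetch sales pipeline records from the crm"),
    ToolSpec("sql_query", "run an analytical sql query over the warehouse"),
    ToolSpec("send_email", "send an email message to a recipient"),
]
print(select_tool("fetch last quarter sales records", TOOLBOX).name)  # crm_query
print(select_tool("send the summary by email", TOOLBOX).name)         # send_email
print(select_tool("translate this document", TOOLBOX))                # None
```

Note the `None` case. A system that always forces some tool call, even when nothing fits, is one of the behaviors worth probing in a demo.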

🏃 Execute the plan AUTONOMOUSLY over multiple steps?

✅ YES: Proceeds through multiple steps of its plan, potentially involving different tools, without requiring human intervention, confirmation, or prompting at each intermediate stage to continue.

❌ NO: Stops after each step to wait for the next command, constantly requires explicit user approval ("OK to proceed?"), or cannot chain actions together without human guidance.

PM Watch Out For: Systems that narrate their steps but still need permission ("Now I will search for flights. Is that okay?"). True autonomy means proceeding towards the goal unless a critical failure or ambiguity requires intervention.

Level Indication: YES is a baseline requirement for any Agent (L1-L3). NO indicates Assistant.
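"Proceed unless something critical happens" can be expressed as an execution loop that never pauses for routine approval. It hands control back only on a critical failure or an unresolved ambiguity. This is a minimal sketch; the `Status` values, the `StepResult` type, and the escalation rule are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    OK = "ok"
    AMBIGUOUS = "ambiguous"  # cannot proceed safely without clarification
    CRITICAL = "critical"    # unrecoverable failure


@dataclass
class StepResult:
    status: Status
    detail: str = ""


def run_autonomously(
    steps: list[Callable[[], StepResult]],
) -> tuple[int, Optional[StepResult]]:
    """Run steps back to back with no per-step approval prompts.

    Returns (steps completed, the result that forced escalation, or None if all succeeded).
    """
    for completed, step in enumerate(steps):
        result = step()
        if result.status is not Status.OK:
            return completed, result  # the only point where a human is pulled in
    return len(steps), None


plan = [
    lambda: StepResult(Status.OK, "retrieved order history"),
    lambda: StepResult(Status.OK, "checked refund policy"),
    lambda: StepResult(Status.AMBIGUOUS, "order matches two customer accounts"),
]
done, escalation = run_autonomously(plan)
print(done, escalation.detail)  # 2 order matches two customer accounts
```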

🤔 ADAPT and Recover when things go wrong or change?

✅ YES: Demonstrates ability to handle common errors (e.g., API timeout, unexpected data format from a tool), unexpected information, or changing conditions by retrying, using an alternative tool, adjusting its plan, or proactively asking clarifying questions. Shows resilience and robustness.

❌ NO: Fails completely, loops indefinitely, produces nonsensical output, or stops and gives up when encountering deviations from the perfect "happy path." Lacks resilience.

PM Watch Out For: Demos that only showcase perfect scenarios. Actively probe failure modes: "What happens if this API call fails?", "What if the website it needs to scrape changes format?", "What if the user gives contradictory information?". Robust adaptation is a key differentiator for more advanced, reliable agents.

Level Indication: Strong YES suggests a more robust L2 or L3 Agent. NO indicates a brittle Assistant or L1 Agent.
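A common recovery pattern has three stages. First, retry a failing tool a bounded number of times with exponential backoff. Then fall back to an alternative tool. Only after that, escalate. The sketch assumes tools signal failure by raising exceptions; `flaky_flight_api` and `backup_flight_api` are hypothetical placeholders for real integrations.

```python
import time
from typing import Callable, Sequence


class AllToolsFailed(Exception):
    """Raised when every tool in the fallback chain has exhausted its retries."""


def call_with_recovery(
    tools: Sequence[Callable[[str], str]],
    query: str,
    retries: int = 2,
    base_delay: float = 0.1,
) -> str:
    """Try each tool in order, retrying with exponential backoff before falling back."""
    errors: list[str] = []
    for tool in tools:
        for attempt in range(retries + 1):
            try:
                return tool(query)
            except Exception as exc:  # e.g. a timeout or an unexpected data format
                errors.append(f"{tool.__name__} attempt {attempt + 1}: {exc}")
                if attempt < retries:
                    time.sleep(base_delay * 2 ** attempt)
    raise AllToolsFailed("; ".join(errors))


def flaky_flight_api(query: str) -> str:
    raise TimeoutError("upstream timed out")


def backup_flight_api(query: str) -> str:
    return f"3 flights found for {query}"


print(call_with_recovery([flaky_flight_api, backup_flight_api], "SFO-JFK", base_delay=0.0))
# 3 flights found for SFO-JFK
```

The bounded retries matter as much as the fallback. They keep a struggling system out of the "loops indefinitely" failure mode listed above.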

Interpreting the Results:

Scorecard: How many ✅s did the system realistically earn based on evidence?

0-1 ✅: Definitely an Assistant or simple automation. Calling it an "Agent" is misleading marketing spin.

2-3 ✅ (esp. Goal + Autonomy): Might qualify as a basic Level 1 Task Executor Agent. Useful for specific automation, but limited flexibility. Manage expectations accordingly.

4 ✅ (esp. adding Plan + Tool Use): Likely a genuine Level 2 Workflow Coordinator Agent. Capable of automating moderately complex processes. This is where many practical enterprise agents are emerging.

5 ✅ (strong evidence for all, esp. Adaptation): Potentially a Level 3 Autonomous Strategist. This is rare and requires significant scrutiny. Demand strong evidence of dynamic planning, diverse tool use, and robust adaptation in novel situations.
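Because the thresholds are explicit, they can be written down as a function. That helps keep assessments consistent across vendors and internal proposals. This sketch encodes one reading of the scorecard and rests on two interpretive assumptions. First, Goal and Autonomy are treated as prerequisites for any agent level, per the Level Indications above. Second, the "esp." conditions are treated as hard requirements. The output is a starting point for discussion, not a verdict.

```python
CRITERIA = ("goal", "plan", "tools", "autonomy", "adaptation")


def classify(evidence: dict[str, bool]) -> str:
    """Map Litmus Test results (criterion -> evidenced YES) to a rough capability level."""
    unknown = set(evidence) - set(CRITERIA)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    score = sum(bool(evidence.get(c, False)) for c in CRITERIA)
    # Assumption: goal pursuit and autonomous multi-step execution are prerequisites for any agent.
    if score <= 1 or not (evidence.get("goal") and evidence.get("autonomy")):
        return "Assistant / simple automation"
    if score == 5:
        return "Potential Level 3 Autonomous Strategist (scrutinize heavily)"
    if score == 4 and evidence.get("plan") and evidence.get("tools"):
        return "Likely Level 2 Workflow Coordinator"
    return "Possible Level 1 Task Executor"


print(classify({"goal": True, "autonomy": True}))
# Possible Level 1 Task Executor
print(classify({"goal": True, "plan": True, "tools": True, "autonomy": True}))
# Likely Level 2 Workflow Coordinator
```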

Why This Clarity is Your PM Superpower (Connecting to Value & Profit) 🔑

Okay, we've gone deep into definitions, levels, and architectures. Why does all this matter so much for you, the Product Manager?

Because in the rapidly evolving world of AI, clarity isn't just nice to have – it's your strategic advantage. It's your path to building valuable and, ultimately, profitable products.

Here’s how understanding the Agent vs. Assistant distinction (and the different Agent Levels) becomes your superpower:

Think Bigger (Outcomes → Value → Profit):

Hype Trap: Focusing on features like "Add AI summary button."

Clarity Advantage: Shifting your mindset from features to valuable outcomes that agents can deliver. Instead of the button, you ask, "Can we build a Level 2 Agent whose goal is to 'Generate and distribute the draft quarterly sales report,' pulling data from Salesforce (Tool 1), analyzing trends (Tool 2), and drafting the summary email (Tool 3), saving our sales ops team 10 hours per report?" This focus on solving bigger user problems and delivering measurable business impact (time saved = cost reduction) is how you create products customers truly value and are willing to pay for.

Manage Reality (Scope, Cost, Risk → Profitability):

Hype Trap: Underestimating the effort required to build an "AI Agent" based on a flashy demo, leading to blown budgets and missed deadlines.

Clarity Advantage: Recognizing that building robust agents (especially Level 2 and Level 3) is significantly more complex and costly than building assistants or simple L1 agents.

You understand the need for sophisticated orchestration, reliable tool integrations, robust error handling, extensive testing, and ongoing evaluation (Agent Ops).

This clarity allows you to scope realistically ("Is this a 2-month L1 feature or a 9-month L3 strategic initiative?"), estimate Total Cost of Ownership (TCO) accurately (including compute, specialized engineering, data integration, monitoring), manage risks (reliability, safety, hallucination potential), and build a profitable business case. Overpromising based on hype leads to unprofitable products and technical debt.
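To make the TCO point tangible, here is a back-of-the-envelope business case in code. It reuses the quarterly sales report agent from above, which saves 10 hours per report. Every dollar figure and the hourly rate are placeholders chosen for illustration, not benchmarks. The cost categories mirror the ones just listed: compute, specialized engineering, data integration, and monitoring.

```python
from dataclasses import dataclass


@dataclass
class AgentBusinessCase:
    """Hypothetical cost/value model for one agent; all inputs are assumptions."""

    # One-time costs (USD)
    engineering_build: float
    data_integration: float
    # Recurring annual costs (USD)
    compute_per_year: float
    monitoring_and_evals_per_year: float
    maintenance_per_year: float
    # Value drivers
    hours_saved_per_run: float
    runs_per_year: int
    loaded_hourly_rate: float  # fully loaded cost of the people whose time is saved

    def annual_value(self) -> float:
        return self.hours_saved_per_run * self.runs_per_year * self.loaded_hourly_rate

    def annual_running_cost(self) -> float:
        return self.compute_per_year + self.monitoring_and_evals_per_year + self.maintenance_per_year

    def tco(self, years: int) -> float:
        """Total cost of ownership over `years`: one-time costs plus recurring costs."""
        return self.engineering_build + self.data_integration + years * self.annual_running_cost()

    def net_value(self, years: int) -> float:
        return years * self.annual_value() - self.tco(years)


# Placeholder numbers for the quarterly sales report agent (4 runs a year).
case = AgentBusinessCase(
    engineering_build=60_000,
    data_integration=15_000,
    compute_per_year=6_000,
    monitoring_and_evals_per_year=8_000,
    maintenance_per_year=10_000,
    hours_saved_per_run=10,
    runs_per_year=4,
    loaded_hourly_rate=100,
)
print(case.annual_value())      # 4000
print(case.tco(years=3))        # 147000
print(case.net_value(years=3))  # -135000
```

With these placeholder inputs, the case never pays back. Four reports a year at 10 hours each are worth far less than the recurring running costs alone. The same structure applied to a task that runs daily would tell a very different story. That is exactly the kind of question to settle before committing to an L2 build.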

Ask the Right Questions (Due Diligence → Smart Investment):

Hype Trap: Accepting vendor claims or internal proposals at face value ("Yes, it's an AI Agent!").

Clarity Advantage: Using the Levels framework and Litmus Test for rigorous due diligence. You ask probing questions:

"What Level of agent capability are we realistically aiming for/buying, and what's the concrete evidence?"

"What's the precise goal this agent achieves, and how will we measure its success and ROI?"

"What specific tools does it need? How reliable are they? How does it choose between them?"

"Show me how it plans and adapts to real-world failures, not just the happy path."

"What's the plan for reliability and validation, especially for high-stakes tasks (cf. 'Contracts')?"

"How will we evaluate this continuously in production (Agent Evals & Ops)? What are the key metrics?"

This rigor prevents costly mistakes and ensures you invest precious resources (time, money, people) wisely on initiatives with a real chance of success.

Build Trust & Differentiation (Not Gimmicks):

Hype Trap: Slapping the "AI Agent" label on a simple chatbot or summarizer, hoping users won't notice the difference.

Clarity Advantage: Understanding that users aren't stupid. Shipping an "Agent" that is clearly just a basic Assistant erodes trust instantly. Conversely, being honest about capabilities ("This is a Level 1 Task Executor that reliably automates X," or "This is a Level 2 Workflow Coordinator designed to handle Y") and delivering genuine, reliable autonomous value at the level you claim builds loyalty and differentiates your product.

Substance and reliability stand out in a market flooded with "AI-powered" fluff. Don't call a simple RAG chatbot an "Insight Agent" if it can't actually generate novel insights through planning and tool use.

Gain Strategic Advantage:

Hype Trap: Chasing every new AI trend, spreading resources thin, and delivering mediocre results.

Clarity Advantage: The PM who truly understands the nuances of agent capabilities can identify genuine, high-value opportunities others miss amidst the noise.

You can allocate resources more effectively, confidently navigate technical complexity, and guide your team to build differentiated, valuable AI products that solve real problems – the foundation of sustainable, profitable growth.

Conclusion: From Hype to High-Value, Profitable Products

So, where does this leave us? We've journeyed beyond the buzzwords, dissecting the crucial differences between reactive AI Assistants and proactive AI Agents.

We've seen that "Agent" isn't a monolith but a spectrum, ranging from simple Level 1 Task Executors to complex Level 3 Autonomous Strategists, often working together in Multi-Agent Systems.

You're now armed with a framework (The Levels) and a practical tool (The Litmus Test) to cut through the marketing slop and assess reality.

Actionable Steps for Building Valuable AI Products:

How do you translate this understanding into action?

Identify High-Value Problems: Forget the tech for a moment. Where are the biggest pain points, inefficiencies, or untapped opportunities for your users or business? Which of these could genuinely benefit from the autonomous, goal-driven capabilities of a Level 2 or Level 3 agent? Start with the outcome and work backward.

Apply Rigorous Evaluation (Internally & Externally): Use the Litmus Test and Levels framework relentlessly. Scrutinize internal proposals ("Are we being realistic about this L3 ambition?"). Challenge vendor claims ("Show me the evidence for adaptation"). Be skeptical. Demand proof.

Scope Realistically & Iterate: Acknowledge the true complexity and cost associated with the desired Agent Level. Is an L1 or L2 agent sufficient to deliver significant value now? Maybe start there, prove the value, learn, and iterate towards higher levels, rather than attempting a high-risk, complex L3 moonshot from day one. Factor in costs for development, tools, data, and ongoing evaluation (Agent Ops).

Measure What Matters: Define clear, measurable KPIs tied directly to the agent's intended goal and the resulting business impact (e.g., hours saved, error rate reduction, conversion rate increase, customer satisfaction improvement). Implement robust evaluation methods that track the final success rate and the quality of the agent's process (trajectory), efficiency, and cost.
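Most of these metrics come from aggregating per-run logs. The sketch below assumes a hypothetical run record holding a success flag, a step count, and a cost per run. It reports success rate, average trajectory length, and cost per successful task. The field names and sample values are illustrative, not a standard schema or real data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AgentRun:
    succeeded: bool  # did the run achieve the stated goal?
    steps: int       # length of the trajectory (e.g. number of tool calls)
    cost_usd: float  # compute plus tool/API spend for this run


def summarize_runs(runs: list[AgentRun]) -> dict[str, float]:
    """Aggregate per-run logs into goal-level KPIs."""
    if not runs:
        raise ValueError("no runs to summarize")
    successes = [r for r in runs if r.succeeded]
    total_cost = sum(r.cost_usd for r in runs)
    return {
        "success_rate": len(successes) / len(runs),
        "avg_steps": mean(r.steps for r in runs),
        # Failed runs still cost money, so their cost is charged to the successful ones.
        "cost_per_success": total_cost / len(successes) if successes else float("inf"),
    }


sample_runs = [
    AgentRun(True, 6, 0.40),
    AgentRun(True, 8, 0.50),
    AgentRun(False, 15, 1.10),
    AgentRun(True, 5, 0.30),
]
kpis = summarize_runs(sample_runs)
print(kpis["success_rate"])                # 0.75
print(kpis["avg_steps"])                   # 8.5
print(round(kpis["cost_per_success"], 2))  # 0.77
```

Charging the cost of failed runs to the successful ones is a deliberate choice. It keeps a low success rate from hiding inside a cheap-looking per-run average.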

Build Trust Through Honesty & Reliability: Be transparent about your agent's capabilities and limitations. Don't call something an "Autonomous Agent" if it constantly needs human hand-holding. Invest heavily in making the agent reliable and robust in real-world conditions. A dependable L2 agent consistently delivering value is far better than a flashy L3 concept that frequently fails.

Final Thought:

The agentic future of AI is exciting, brimming with potential. But it won't be built on flimsy marketing narratives or wishful thinking.

It will be forged by pragmatic, value-focused product leaders like you – leaders who possess the clarity to distinguish hype from reality, the rigor to demand evidence, and the strategic vision to guide their teams in building AI solutions that are not just intelligent but genuinely useful, reliable, and ultimately, profitable.

Let’s build reality, not hype.

For more such insightful articles, check out:

AI Product Management – Learn with Me Series

Welcome to my “AI Product Management – Learn with Me Series.”